It’s already been a week since I got back from New Orleans, but the memories are still strong.

The smells of Bourbon Street and the French Quarter were perhaps a bit too strong – but the sights were amazing, the conference was intimate and inspiring, and the Louisiana Gulf redfish (two nights in a row!) made it a worthwhile trip on the food alone.

People are still warming back up to travel and in-person conferences. TK was a bit smaller than in past years, but the intimacy made for real connection. There wasn’t a traditional Expo with booth after booth of vendors. Instead, there were a few focused stands where people could talk to (mostly) tech and tool companies. The lunches were lunch & learns, hosted by different tech/tool providers who then had to try to present a session over a ballroom of people talking and eating.

Best thing of all? Connecting and reconnecting with L&D peers from all over the place. I’d name all the names, but that just feels like pure name-dropping 😊

Here are my session recaps from two days at ATDTK23:

TUESDAY – February 6, 2023

Opening Keynote – Limitless: Supercharge Your Brain to Learn Faster and Remember More (Jim Kwik)

Touted as a “world-renowned brain coach”, Jim Kwik is on a mission to help people get more out of learning and productivity. He's got a popular podcast (no sponsors/ads) that democratizes the info. Each episode is 20 minutes (keeping it short so we can focus).

https://www.jimkwik.com/

(go to “more” to find the podcast link)

The forgetting curve. Within two days of first exposure, we lose 80% of what we learned.
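To see what that 80% figure implies, here is a worked sketch under an assumption that is mine, not the keynote's: that retention follows a simple exponential decay, a common textbook simplification of Ebbinghaus's forgetting curve. The symbols are illustrative: R is the fraction retained, t is days since first exposure, and S is a "stability" constant.

$$
R(t) = e^{-t/S}, \qquad R(2) = 0.2 \;\Rightarrow\; e^{-2/S} = \tfrac{1}{5} \;\Rightarrow\; S = \frac{2}{\ln 5} \approx 1.24 \text{ days}
$$

$$
R(1) = e^{-\ln 5 / 2} = \frac{1}{\sqrt{5}} \approx 0.45
$$

Under that simplified model, only about 45% would remain after a single day, which is why spaced review in the first day or two matters so much.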

- How do we maintain emotional buoyancy or resilience?

- How do we stay focused with so many distractions?

- How do we activate or engage a stagnant mind?

Two big dips in our minds/memory - when we graduate and when we retire.

Information + Emotion = Long term memory retention

“When the body moves, the brain grooves.”

Information Fatigue Syndrome (Information anxiety). People are drowning in information but starving for the skills to keep up and get ahead.

Meta learning -- learn how to learn = the art and science of learning how to learn

Your ability to learn is the ultimate competitive advantage.

Kids graduating now could have 14+ CAREERS in their lifetime…things are changing so rapidly.

BE FAST (use this to help you learn/focus better!)

B = Belief.

If you believe you can or you can't, either way you're right. All behavior is belief driven. A belief is something you hold true. In order to create a new result, you need a new behavior, and you need a belief that says it's even possible. "You'll see it when you believe it." Instead of saying "I don't have a great memory," say "I don't have a great memory…yet."

E = Exercise. Not necessarily physical exercise, but that does help. Exercise is practice and practice makes progress. Common sense is not common practice.

F = Forget.

Your mind is like a parachute: it only works when it's open. If your cup is full, there's no room for more information/knowledge. Many of us with 20 years of experience have one year of experience that we've practiced for 20 years.

Get into zen, beginner's mind. Forget what you know about the subject so you can learn something new. Also forget about distractions. You're not multitasking, you're TASK SWITCHING. The human brain can't do more than one cognitive thing at a time. Why you shouldn't task switch: 1) Task switching costs you time - to go from one task to the other, you have to refocus yourself. 2) It creates mistakes. 3) It drains your energy; trying to do too much at the same time uses more brain glucose.

Forget about what you know about a subject and forget about distractions.

A = Active.

People don't learn by having information pushed into their heads. We don't learn through consumption - we learn best through CREATION. How can you make your learning more active? Learning is not a spectator sport. Some people take notes. Handwritten notes actually score better than digital notes: because you can't handwrite as fast as someone speaks, it forces you to be active.

Jim Kwik notetaking methods (google that) - capture/create - take notes on the left side (capture); on the right side you make notes (create) - how am I going to use this, what questions do I have?

S = State.

Your emotional state. State regulation.

Emotional resilience. You control how you feel.

Take regular brain breaks. Move. Hydrate (30% better focus when we're hydrated?).

Breathe. The bottom 2/3 of your lungs absorb most of the oxygen. If your diaphragm is collapsed, you don't get as much focus, etc.

The Pomodoro Technique. We can stay focused for about 20-25 minutes. Work in shifts, then take brain breaks.

Have a "to feel list" - three ways I want to feel today (alongside your to-do list).

Two most powerful words = I AM (then whatever you put after it)

You don't have focus, you do it. You don't have memory, you do it.

Take your energy back. You don't have motivation, you follow a process.

Purpose, energy, focus, small simple steps. That's how you motivate yourself.

T = Teach.

The fastest way to learn something is to learn it with the intention of teaching someone else.

"The Explanation Effect" - when you learn something with the intention of explaining it to someone else, you learn it better.

To better remember names, remember MOM. (People don't care how much you know until they know how much you care. People will forget what you said, but they'll always remember how you made them feel.)

- M = Motivation. (Why do I want to remember this person's name? If you can't come up with a reason, you won't remember.)

- O = Observation. It's not your retention, it's your ATTENTION. Listen --> scramble those letters and it spells silent. We often don't listen well because we're trying to figure out what we want to say.

- M = Methods. There are plentiful methods out there. Subscribe to his free podcast. It will cost you your attention, that's it.

*** Lots of great tips and ideas in this really active session. He had us moving and grooving, for sure. Probably some rehash of core concepts for most L&D professionals, but always good to refresh. I did buy his book Limitless – because who doesn’t want fewer limits in life?

____

Learning Data for Analytics and Action (Megan Torrance, Torrance Learning)

Data and analytics – it’s a mashup of two things - like chocolate and peanut butter - they go better together!

xAPI = a way to manage and store large amounts of data

Analytics = what you DO with that data

Book to check out: Measurement Demystified (David Vance and Peggy Parskey) 2021

Why do we measure learning and development?

In the poll, the audience rated their top answers as: assessing gaps, evaluating programs, monitoring results, and establishing benchmarks.

xAPI = Experience API (application programming interface)

xAPI is free, but it does require tools that are not free.

It doesn't DO anything, but it's how we talk about what it does.

SCORM = time, score, location, status, answers (low barriers to entry, interoperable data specification).

The data that's interesting about our leadership programs should be different than the data that's interesting about our safety programs, etc. But we've got one set of data to review for everything in our LMS via SCORM - it's a limited vocabulary. SCORM is a vocabulary.
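For a concrete sense of that limited vocabulary, here is a sketch of how "time, score, location, status, answers" line up with SCORM 1.2 run-time data model elements. The element names follow the SCORM 1.2 CMI data model; the values are hypothetical. The point is that a leadership course and a safety course would both report into exactly these same keys.

```python
# SCORM 1.2 run-time elements behind "time, score, location, status, answers".
# Values are hypothetical; every course reports into these same fixed fields.
scorm_record = {
    "cmi.core.session_time": "00:42:10",         # time
    "cmi.core.score.raw": "85",                  # score
    "cmi.core.lesson_location": "slide_12",      # location (bookmark)
    "cmi.core.lesson_status": "passed",          # status
    "cmi.interactions.0.student_response": "b",  # answers
}

for element, value in scorm_record.items():
    print(f"{element} = {value}")
```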

xAPI is more like grammar: actor, verb, object, result, context. It's more like LEGOs - everything follows the same grammar, but you can mix and match.
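Here is a minimal sketch of what one of those "sentences" looks like as an xAPI statement. The learner, the example.com IRIs, and the registration UUID are hypothetical; the verb IRI comes from the ADL verb vocabulary. The `result.response` field is one place open-text comments could live before being fed to a semantic tool.

```python
import json

# A minimal xAPI statement: actor + verb + object, with optional result and context.
# The learner, the example.com IRIs, and the registration UUID are hypothetical.
statement = {
    "actor": {
        "objectType": "Agent",
        "name": "Pat Learner",
        "mbox": "mailto:pat.learner@example.com",
    },
    # Verb IRI from the ADL verb vocabulary.
    "verb": {
        "id": "http://adlnet.gov/expapi/verbs/completed",
        "display": {"en-US": "completed"},
    },
    "object": {
        "objectType": "Activity",
        "id": "https://example.com/courses/safety-101",
        "definition": {"name": {"en-US": "Safety 101"}},
    },
    # Result: how it went. 'response' can hold open text for later analysis.
    "result": {
        "score": {"scaled": 0.85},
        "success": True,
        "completion": True,
        "response": "I'll use the new checklist before every shift.",
    },
    # Context: where this happened, e.g. as part of a larger program.
    "context": {
        "registration": "ec531277-b57b-4c15-8d91-d292c5b2b8f7",
        "contextActivities": {
            "parent": [{"id": "https://example.com/programs/new-hire-onboarding"}]
        },
    },
}

print(json.dumps(statement, indent=2))
```

Swapping in a different verb or object produces a different statement built from the same grammar, which is the LEGO point.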

You can feed the open text into a semantic tool to then get the "vibe" of the comments.

You can do a lot of useful things with xAPI - deliver and track outside of the LMS; track offline training, plus a bunch of other stuff. Megan says, you can do all of this without xAPI, but it's not recommended.

xAPI drips data - activity statements - into the Learning Record Store (LRS), the official storage place of xAPI. xAPI is the plumbing.
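To make the "plumbing" concrete, here is a sketch of preparing one statement to be sent to an LRS's `/statements` resource with Python's standard library. The endpoint URL and credentials are hypothetical placeholders; the `X-Experience-API-Version` header and the POST to `statements` follow the xAPI spec. The request is only built here, not sent.

```python
import base64
import json
import urllib.request

# Hypothetical LRS endpoint and credentials; a real LRS issues its own.
LRS_ENDPOINT = "https://lrs.example.com/xapi/"
LRS_KEY, LRS_SECRET = "demo-key", "demo-secret"


def build_statement_request(statement: dict) -> urllib.request.Request:
    """Prepare (but do not send) a POST of one statement to the LRS."""
    token = base64.b64encode(f"{LRS_KEY}:{LRS_SECRET}".encode()).decode()
    return urllib.request.Request(
        url=LRS_ENDPOINT + "statements",
        data=json.dumps(statement).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            # xAPI expects a version header on every request.
            "X-Experience-API-Version": "1.0.3",
            "Authorization": f"Basic {token}",
        },
    )


# e.g., tracking offline training: a learner "experienced" an in-person workshop.
req = build_statement_request({
    "actor": {"mbox": "mailto:pat.learner@example.com"},
    "verb": {"id": "http://adlnet.gov/expapi/verbs/experienced",
             "display": {"en-US": "experienced"}},
    "object": {"id": "https://example.com/activities/offline-workshop"},
})
print(req.get_method(), req.full_url)
# Actually sending it would be: urllib.request.urlopen(req)
```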

"Learning Analytics is the science and art of gathering, processing, and interpreting data and communicating results…designed to improve individual and org performance and inform stakeholders." (Learning Analytics book)

Reporting = sharing regular and routine information vs. Analytics = answering exploratory questions

Framework for using data (it's not just about analytics):

- archive (store and secure, credentialing)

- analyze (descriptive, diagnostic, predictive, prescriptive)

- act on (recommend learning, personalize & adapt learning,

Energy management - as an instructional designer, you don't have to go off and get a master's in Data Science. But you need some data fluency and data literacy (the Federal Reserve Board has some courses on data science). Your job as a learning professional is essential to those data scientists. The data scientists need to work with the learning team; otherwise they don't get the learning bit - they need us to tell them. The data scientists should be asking, "Tell us which of these things is interesting to you."

If you don't get IT involved in this, you will fail.

Most orgs start at the top and work downward to deeper questions:

- Descriptive - what happened? Check out the activity stream, e.g., the total # of interactions per user. Math & statistics.

- Diagnostic - why did this happen? (Ask an upfront question about why this program is important to you…why this matters - you can't get that data out of SCORM!) Then compare that to what they answered at the end and what changed. Statistics.

- Predictive - what will happen? If you see trouble happening, you can change that course. The Traffic Light Dashboard (a Learning Guild article). Data science.

- Prescriptive - how can we make X happen? Data science & machine learning.
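The first two levels can be sketched in a few lines. This is a toy illustration, not Megan's method: the activity stream, learner names, and the 1-5 "how much does this matter to you?" ratings are all hypothetical. Descriptive counts interactions per user; the diagnostic step compares the same question asked at the start and at the end.

```python
from collections import Counter
from statistics import mean

# Hypothetical, simplified activity stream: (learner, verb) pairs pulled from an LRS.
activity_stream = [
    ("ana", "experienced"), ("ana", "answered"), ("ana", "completed"),
    ("ben", "experienced"), ("ben", "answered"),
    ("cy", "experienced"),
]

# Descriptive: what happened? Total interactions per user.
interactions_per_user = Counter(learner for learner, _verb in activity_stream)
print(interactions_per_user.most_common())  # [('ana', 3), ('ben', 2), ('cy', 1)]

# Diagnostic (sketch): the same 1-5 "why this matters to you" question,
# asked upfront and again at the end (hypothetical answers).
pre = {"ana": 2, "ben": 3, "cy": 4}
post = {"ana": 4, "ben": 3, "cy": 3}
change = {learner: post[learner] - pre[learner] for learner in pre}
print(change, "average change:", mean(change.values()))
```

The predictive and prescriptive levels need more data and real modeling, which is where the data scientists come in.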

She mentioned Ben Betts' Learning Maturity Model (I’m not finding a link to the article, but I did find links to a bunch of webinars Ben did) - benchmark yourself against other orgs.

Getting started

- Make a plan

- Define questions

- Gather & Store data

- Analyze & iterate

- Visualize and share results

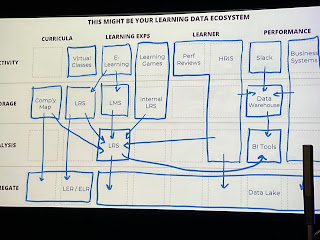

Map out your learning data ecosystem - activity, storage, analysis, aggregate.

The value of the questions you ask has a HUGE impact on the value of your analysis:

- What does the organization need? What are we curious about (the business, the learning team, the learner)?

- What do evaluation frameworks suggest to us? She showed how to use Thalheimer's LTEM model.

- Where is the data to answer our questions?

- Form hypotheses and analyze - exploratory analysis vs. hypothesis testing

- Gather and store your data - capture and send activity data (your elearning authoring tool can probably do this, as can custom code and other business tools); receive & store the data (an LRS); analyze the data (if you have a tool with a reporting function, pull the data into an aggregated tool - LRSs often have analytics tools, that interesting layer of graphics); downstream analysis and visualization tools (Tableau, Power BI, etc. - the powerful tools the rest of your org is already using)

The LRS can also give data BACK to the learning experience. (With SCORM, data cannot be shared from course to course, but you can do that with xAPI).
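As a sketch of that "data back" direction, a second course could ask the LRS whether a learner already completed a prerequisite. The endpoint and example.com IRIs below are hypothetical; the `agent`, `verb`, `activity`, and `limit` filters are query parameters defined for the xAPI `GET /statements` resource. Only the URL is built here.

```python
import json
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlparse

# Hypothetical LRS endpoint; a real GET also needs the
# X-Experience-API-Version header and credentials, as with a POST.
LRS_ENDPOINT = "https://lrs.example.com/xapi/"


def statements_query_url(learner_email: str, verb_iri: str,
                         activity_iri: Optional[str] = None,
                         limit: int = 25) -> str:
    """Build a GET /statements URL filtered by learner, verb, and optional activity."""
    params = {
        # The xAPI 'agent' filter is a JSON-encoded Agent object.
        "agent": json.dumps({"mbox": f"mailto:{learner_email}"}),
        "verb": verb_iri,
        "limit": limit,
    }
    if activity_iri is not None:
        params["activity"] = activity_iri
    return LRS_ENDPOINT + "statements?" + urlencode(params)


# Course B asks: has this learner already completed course A?
url = statements_query_url(
    "pat.learner@example.com",
    "http://adlnet.gov/expapi/verbs/completed",
    activity_iri="https://example.com/courses/safety-101",
)
query = parse_qs(urlparse(url).query)
print(json.loads(query["agent"][0]))  # the agent filter round-trips as JSON
```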

How do we communicate it?

Pretty charts and graphs! Check out books like "Show Me the Numbers".

This is how you make your case to get the budget, the access, the permission to do that next layer of analytics.

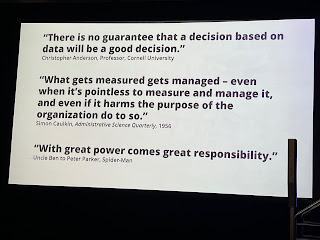

Megan topped things off with her favorite quotes about data:

____

Best Practices in Instructional Design for the Accidental Instructional Designer (Cammy Bean, Kineo)

This was my session!

We talked about Learning Pie and the well-rounded learning professional. What’s your sweet spot? Was it Learning, Creativity, Technology, or Business expertise that brought you to ID? What’s your weakness and how can you build your skills in that area?

____

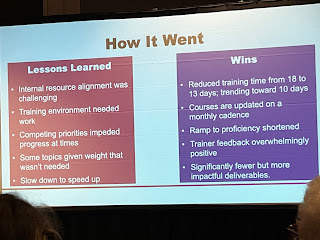

Using Rapid Development to Reduce Training Time & Design Debt (Cait Quinn, Sirius XM)

Case study about Cait’s experience with her team to streamline and mature their production process. This was a great session with a lot of hands-on, tactical ideas for learning teams struggling with rapid timelines and rapidly changing product offerings.

The team:

Global remote team supporting international contact centers

3 IDs, 1 WalkMe Specialist, 1 Learning Technologist

Learners:

- Agents who answer phones or chat conversations

- 18-24 years old

- English is their second or third language

- Last year, trained 15,000 people

Trainers:

Trainers teach different classes; they are SMEs turned trainers and may have weak facilitation skills.

In 2020, they had to shift to a virtual classroom; it had all been ILT before.

The feedback/the challenge:

- "Class is too long" – general feedback that training was too long

- "Training is inconsistent" - Training was inconsistent across trainers. Materials were out of date/inconsistent. Trainers downloaded content locally and then never went back for updated versions.

- "You take too long to deploy" - building Storyline courses for all elearning content was taking too long

- Multiple nuanced versions of courses created a backlog (e.g., pricing changes)

How did they fix this?

1. Create a culture of collaboration

Built a "design system" in Rise

- Created a library of content that any designer could use

- Documented how to create a good piece of learning material - personas, how to use their logo, how to publish out to the LMS correctly

- Loaded all the fonts into Rise

- Because it lived in Rise, contractors and designers could all use it

- Built presentation and handout templates in Google Slides

- Got buy-in from the whole team because they owned it

- Templates eliminated the overload of choices that you have when you have a blank canvas

- (YES - this is maturity - establishing process and governance)

Set up an internal cloud-based sharing system

- IT switched from MS Office to Google Workspace

- Team loads everything to Google Workspace

- Set up an Articulate Rise Team

- Also set up Workspace for trainers (they can view but not download)

- Cloud-based video development (Powtoon)

NOW: The team owns the work, not the person. No single knowledge holder, lower stress, higher flexibility.

2. Modernize the Classroom

- Elearning focuses on high level concepts

- Classroom debrief and activities (live virtual classroom)

- Reduced courses from 3.5 hours to 30 minutes – time savings across the board

- Converted from Storyline to Rise - Rise is easy and lets them develop more quickly, although design choices are limited.

- Their partner call centers decide if they're doing ILT or VILT.

- They are not translating content - English is the language of their business

- When they moved to Rise, it was easier to bring in contract producers to help create courses

- Combined facilitator guides and slides

- One slide presentation with everything in it - trainer friendly slide notes

- Eliminated simulations that they had in place as assessments

- For systems training, no longer assessing clicks. The risk is less than it used to be. They decided they were testing all the wrong things. Don't need to assess that you clicked this button.

- Instead, agents need to learn to listen to the customer and respond appropriately - set this up as scenarios - the customer says X, where would you go next?

- Implemented WalkMe and a training environment for practice.

3. Iterate your Development

- Committed to monthly releases of training materials

- Monthly release call with trainers to show what's changed

- Established a regular feedback loop

- Created a form for trainers to submit feedback

- Small changes can be updated the next day; bigger changes get batched for monthly release cycles

- Became OK with imperfection

- Now can make changes on the fly if needed

- Use sprints as opportunities to launch and test

- Engaged internal SMEs (instead of the training/ID team assuming they were the experts)

____

An Introduction to Evaluating Usability in Immersive Technology (Jennifer Solberg, Quantum Improvements Consulting)

Usability = the extent to which an interface is easy/pleasant to use. It’s about making sure your solutions provide value. And in the world of immersive technology, it turns out it’s also about making sure the experience is NOT “barfy.”

Usability is just one factor to look at: also consider whether it’s useful, valuable, desirable, accessible, credible, findable. (see Morville’s User Experience Honeycomb).

Immersive training experiences (e.g., the kind with “goggles”) are great for training because they’re highly emotional and evocative. The way you feel about the experience matters. If it’s unpleasant, it will sit on the shelf.

Note that what’s usable for one person is not necessarily usable for someone else.

The Five F’s of Usability: Fit, Feel, Form, Function, Future Use

Usability is not a one-time analysis you do when it’s done. Instead, check usability at frequent points through the dev process. You want to show IMPROVEMENT over time.

Consider your testing protocol. Have user stories. Then have people say what they’re doing out loud. If they get stuck, watch them struggle, then provide a little support. If they’re still stuck, then you step in. Ask, “What did you expect to happen when you did that?”

Using immersive tech can improve accessibility for some, but for others it might be a nightmare.

Do your demographics first. Plot out the distribution of people and their concerns. Do we need to account for this?

When testing, let people warm up to the equipment – it can be a lot. Let them calm down before they really start the training experience. (From personal experience, I know that the first 5-10 minutes of wearing goggles is about me bumping into walls and falling off bridges, etc. Give people time to adjust!)

Day 2 Keynote – Panel Discussion: Building Your L&D Team's Data and Analytics Acumen

- Warner Swain, Business Dev Manager, Barco - projectors, virtual classroom video technology company - WeConnect Technology

- Anthony Auriemmo, Total Rewards, Workday & People Analytics Leader @ Capri Holdings Limited (Versace, Jimmy Choo, Michael Kors) - organizational psych background - 15,000 employees worldwide

- Yasmin Gutierrez, Sr. Manager of Learning Experience @ WM (formerly Waste Management). 50,000 employees. Yasmin works on technology, learning platforms, and the evaluation piece.

-------

Everyone on the learning team should have a basic understanding of technology. "It's everyone's responsibility to understand the technology that you use."

The ATD Capability Model (released Jan 2020) was used at WM to understand where the team's capabilities stacked up and where people needed development…it created a baseline to understand where the team was. WM is part of the ATD Forum and is able to talk to peers and go to conferences - continuing to understand what technologies are out there. Creating a network around us to learn from each other, our peers.

Employees need to understand the technology that they’re using - and not every role uses the tech in the same ways.

Lots of collaboration between the L&D team and the Technology team. It needs to be an early collaboration.

WeConnect pumps out a lot of data – people want to know that Anthony left the class early, Yasmin raised her hand 3 times, etc.

Analytic Acumen - what do we do with all this data that these systems are collecting?

At WM, it's about partnership. Yasmin used to be the only analytics person; now she has analysts, she works with the HR Tech Team and a people analytics team with a data scientist, and they have great vendor partners. They get data from all these sources, put together a story, and share that out.

A 6-month program on leadership. They measured monthly - not going all the way to predictive analytics, but building consistency of measurement. For this high-impact, high-visibility program, monthly measurement showed where they needed to adjust. E.g., they noticed people weren't meeting with their managers as they went through the program, so they adjusted the program to build in those checkpoints.

Collecting baseline retention data to see how the team was doing and how valuable the program was for retention.

"It takes a village" - we don't have to know it all. But it's important to identify our gaps and get help in those areas.

Analysts gather the data and do cleanup, then the senior analysts gather the insights and make recommendations, then go to the graphics team to tell the story visually.

Start with: Is this data accurate? Was it entered right? Then look for insights. Then turn the data into something meaningful.

Capri did their first employee engagement survey in October. They noticed that employees with less than 2 years of tenure are more engaged than those who've been there longer than 2 years. But we don't yet know what the actual driver is, so we can't fully understand what we're looking at. Need to be responsible in the use of statistics. Partner with the data analytics team to craft the story; then sense-check that story with leadership. Need to pick up all the pieces and make sure we're telling the whole story.

"You don't look at data for answers; you look at data for more questions."

Anthony Auriemmo

Once you start looking at the data, more questions come, and more people get excited about looking at the data (so you get more senior buy-in).

Data helps us bring objectivity to the decisions that we make, so we are more informed and make better decisions.

Analyze the data - is it accurate? Is it meaningful? You need to see data in context to interpret it better. E.g., if only 8 out of 100 people completed an assessment and 95% of them got a question wrong, then that data may not be that useful.
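One way to put numbers on that caution: the panel didn't prescribe a method, so the Wilson score interval below is my own choice of a standard way to put error bars on a proportion. Since "95% of 8" isn't a whole number, the sketch assumes 7 of the 8 completers missed the question (hypothetical).

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate 95% Wilson score interval for a proportion (successes out of n)."""
    if n <= 0:
        raise ValueError("need at least one response")
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half_width, centre + half_width


completion_rate = 8 / 100          # only 8 of 100 people completed the assessment
low, high = wilson_interval(7, 8)  # hypothetical: 7 of those 8 missed the question
print(f"completion {completion_rate:.0%}; wrong-answer rate plausibly {low:.0%} to {high:.0%}")
```

With only 8 responses, the plausible wrong-answer rate spans roughly 53% to 98%, and the 92 people who never completed aren't represented at all. Ten times as many responses at the same rate would narrow that range considerably.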

People are consumers of data and have their own biases. We've gotten dashboard happy and everyone wants to see the data right away. But we need someone with data savvy to interpret that data.

When telling the data story, know the audience that you're telling the story to. Don’t overwhelm the audience with data - not everyone needs 20 pages of insights. Think about the level of the audience and the level of detail that they need.

Data Analytics has ranked as the lowest capability - WHY?

L&D teams are so focused on the learning and learning sciences part, and our backgrounds typically aren't in data. Measurement and data need to be part of the design of the solution.

How can we leverage all of this data to make better decisions?

Analytics has often been segmented into its own silo - e.g., a People Analytics Team. We often don't give people outside of data analytics the opportunity to access and use the data, so we keep those silos and make things more segmented.

Be aware: sometimes people's data doesn't match - e.g., one team measures turnover one way, another team measures it another way…
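A quick sketch of how two reasonable turnover definitions can disagree on the same underlying facts. Both formulas are conventions I'm assuming for illustration (separations over average headcount vs. over starting headcount), and the numbers are hypothetical.

```python
# Hypothetical numbers for one site over one year.
separations = 30
headcount_start = 200
headcount_end = 240

# Definition A: separations / average headcount over the period.
turnover_avg = separations / ((headcount_start + headcount_end) / 2)
# Definition B: separations / headcount at the start of the period.
turnover_start = separations / headcount_start

print(f"A: {turnover_avg:.1%}  B: {turnover_start:.1%}")  # A: 13.6%  B: 15.0%
```

Neither number is wrong; they just answer slightly different questions, which is why agreeing on the definition comes before comparing dashboards.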

____

Helping Learners See What You’re Saying: Designing with Animation for e-Learning (Tim Slade, The eLearning Designer’s Academy)

Animation is motion with meaning. It shouldn't simply be decorative. Animation - when used well - can help you focus on what you need to focus on.

Use animation to direct the learner's attention:

- Instead of telling the learner on every slide to click next to continue, you can add a soft pulsing glow around the button to draw attention to the BEGIN button

- An animation can take you from point A to point B and show you the transition (e.g., in Rise when you click the START button, you see the transition to a menu - this helps orient the learner to what's going on and puts the elements on the screen/menu into context)

- This is low-level animation use that will make your courses more intuitive and elegant.

Visualizing concepts:

- Animation can bring meaning to what otherwise would be static content

Influence behaviors:

- Peak level of animation - to help learners DO something different

Bonus: